Making Real-World Robots Smarter with Gemini AI

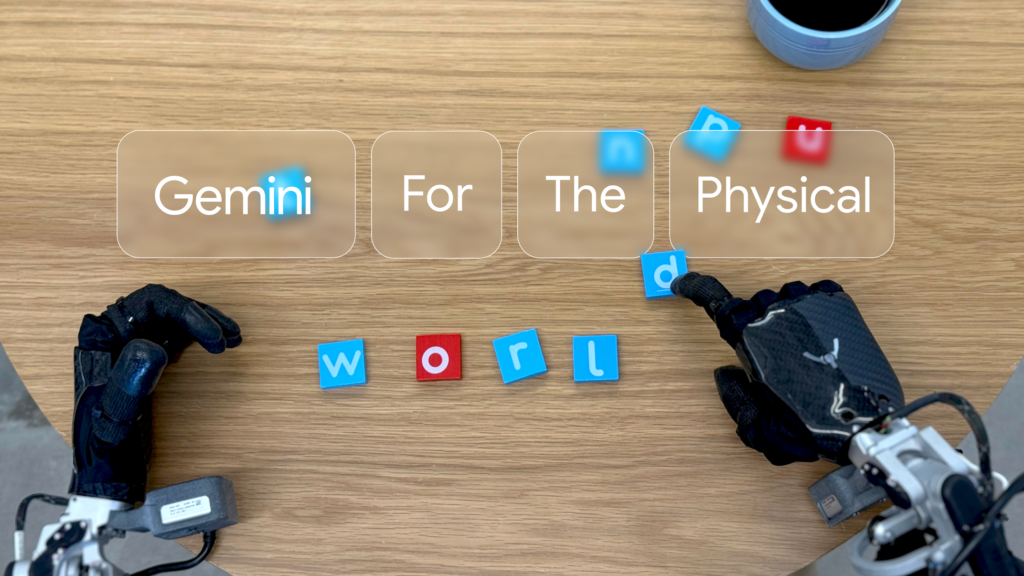

Google DeepMind has taken a major step toward bringing artificial intelligence into the physical world. Until now, most AI models have worked only with digital information such as text, images, and video. Real-world robots need more: they must see, understand, and act in physical environments.

To address this, DeepMind introduced Gemini Robotics, built on the Gemini 2.0 model. The new system is designed to help robots carry out physical tasks with a more human-like understanding of their surroundings.

What Is Gemini Robotics?

Gemini Robotics is a vision-language-action (VLA) model. It combines visual input, natural language understanding, and physical actions to control robots. This means robots can respond to what they see and to the instructions they are given, and then act on that information in the real world.

For example, it can fold origami, pack a snack into a bag, or handle objects it has never seen before. According to DeepMind, Gemini Robotics more than doubles the performance of other state-of-the-art vision-language-action models on a comprehensive generalization benchmark.

Key Features of Gemini Robotics

1. General Understanding

Gemini Robotics can handle new tasks, environments, and objects without task-specific training. This ability to adapt quickly to unfamiliar situations is essential for robots that operate in the real world.

2. Smooth Interaction

It responds to natural, conversational language and understands instructions given in different languages. The robot can adjust its behavior when commands are updated or when its surroundings change. This makes collaboration between people and robots, at home or at work, more practical.

3. Fine Motor Skills

Gemini Robotics can perform tasks that require precise manipulation, such as picking up a mug or sealing a Ziploc bag. This level of dexterity has long been difficult for robots to achieve.

4. Works with Different Types of Robots

Although it was trained primarily on data from the bi-arm ALOHA 2 robotic platform, Gemini Robotics can also control other robots, such as a bi-arm platform based on the Franka arms used in many academic labs and Apptronik’s Apollo humanoid robot.

Introducing Gemini Robotics-ER: Smarter Spatial Thinking

Alongside Gemini Robotics, DeepMind created Gemini Robotics-ER, where "ER" stands for embodied reasoning. This model improves how robots understand space, objects, and physical safety. It can plan movements, estimate the positions of objects, and even write code that controls a robot's actions.

Gemini Robotics-ER reasons about how to grasp items, avoid obstacles, and follow safe paths. It can learn from a small number of examples and adjust its actions without constant reprogramming.

Built with Safety in Mind

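DeepMind focused heavily on safety. Gemini Robotics-ER can be connected to low-level, safety-critical controllers that prevent dangerous movements, for example by avoiding collisions or limiting contact forces. The model is also designed to judge whether a potential action is safe in a given context and to avoid unsafe behavior.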

To help others build safer robots, DeepMind also released the ASIMOV dataset, a benchmark for evaluating robot safety. In addition, it developed a "Robot Constitution" approach, inspired by Isaac Asimov’s Three Laws of Robotics, that guides robot behavior through simple rules written in natural language.

Who’s Using It?

Partners such as Apptronik, Boston Dynamics, and Agility Robotics are testing these models. These partnerships help refine the technology and prepare it for real-world use.

The Future of Helpful Robots

Gemini Robotics and Gemini Robotics-ER bring us closer to real-world robots that are helpful, capable, and safe. With models like these, robots could soon assist people in homes, hospitals, factories, and beyond.

Read our blog post on Gemini 2.5: https://blog.zarsco.com/gemini-2-5-pro-googles-most-intelligent-ai-model-yet/